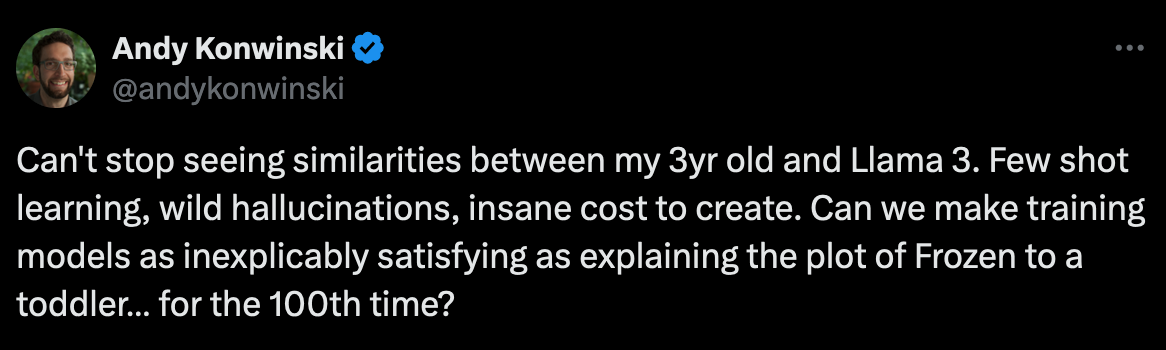

LLMs are like toddlers

I think my 3-year-old and Llama 3 were separated at birth. The way training my daughter involves (verbally) collecting a dataset of thousands of examples. The way she sometimes needs only one example, sometimes dozens. The way she hallucinates (e.g., while learning to count, she skipped “13” for months).

However, thanks to my biological imperative as a dad, I find it deeply satisfying to spend hundreds of hours generating a bespoke training dataset for her, in the form of me continuously explaining how the world works (e.g., today we covered the difference between smoke and steam). That dataset will never be written down, and it can never be used to train any other neural network except my 1-year-old, who overhears it all. For LLMs, by contrast, the training set is larger, noisier, and mostly static, and I don’t feel the same emotional drive to improve it.

Maybe we should hack our biology by making LLMs look or behave more like small children. Or maybe I should sprinkle Raspberry Pis around my house to transcribe all of our conversations into a pre-training dataset?